Please leave comments in the Blogger comment section to help improve this doc. Many thanks!

(On large screens, to avoid Blogger's mangling, view the working version of this file on GitHub.)

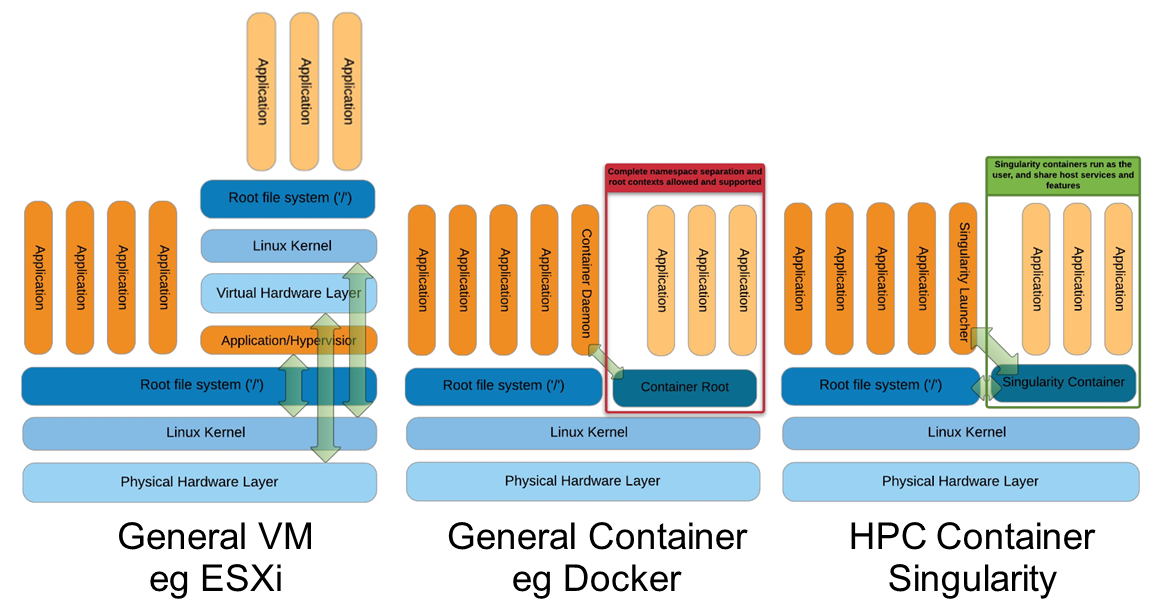

Overview of VM vs Docker vs Singularity

Source: Greg Kurtzer keynote at HPC Advisory Council 2017 @ Stanford

Tabular comparison

| Docker | Singularity | Shifter | UGE Container Ed. | |

|---|---|---|---|---|

| Docker | Singularity | Shifter | UGE Container Ed. | |

| Main problem being addressed | DevOps, microservices. Enterprise applications |

Application portability (single image file, contain all dependencies) Reproducibility, run cross platform, provide support for legacy OS and apps. |

Utilize the large number of docker apps. Provides a way to run them in HPC after a conversion process. It also strip out all the requirements of root so that they are runnable as user process. |

Running dockers containers in HPC, with UGE managing the docker daemon process (?) |

| Interaction w/ Docker |

Singularity work completely independent of Docker. It does have ability to import docker images, convert them to singularity images, or run docker container directly |

Shifter primary workflow is to pull and convert docker image into shifter image. Image Gateway connects to Docker Hub using its build-in functions, docker does not need to be installed. |

||

| User group of primary focus | Developers/DevOps | Scientific Application Users | UGE users | Use Case Focus | microservices. Do one small thing alone but does it well. Often daemon listening on TCP port 24/7 | Images that target a single application or workflow (often with very complicated stacks), software that has difficult dependencies (e.g. old or different library versions) |

| Examplifying Use Scenario |

A trio of container to host a blogging site: One docker container running httpd listen to a mapped TCP port 80 A second container running a MySql DB Finally a WordPress container running on top taking user requests |

|

A validated image containing high energy particle physics workflow. | |

| Portability | Docker Hub |

Single image file. Container purpose build for singularity. Can import docker images. Working to accept docker file or Rocket definition syntax as Singularity definition. Singularity Hub host a repository of images and definition files |

Image Gateway pulls from Docker Hub, automatically convert to shifter image. | |

| Version | 1.15-1 | 2.2 Documentation is decent, with many community articles popping up as well. |

16.08.3 (pre-release) Documentation is work needing progress :). |

8 |

| Host OS Req |

Linux kernel 3.10+, eg: RHEL 7 Debian 8.0 Ubuntu 12.04 SUSE Linux Enterprise 12 other |

RHEL 6 Ubuntu ... |

Cray Linux Environment Linux with kernel 2.6.25+, eg RHEL 6. ... |

RHEL 7 Newer version of Linux that supports Docker/cgroup. |

| Run different OS | Yes. docker pull centos/ubuntu/etc |

Yes. Utilize commands like debootstrap and febootstrap to bootstrap a new image | Yes | |

| Guiding principle | Make container as transparent as possible to user. Run as much as possible as normal user process, change environment only enough to provide portability of the application | Leverage Docker as it is well established. Extract Docker image into squash-FS, run in chroot env | ||

| Scheduler | Docker Swarm | Agnostic, run singularity as a job. Works well with Slurm, UGE, etc. | Can work as stand alone container environment. For scalability, scheduler integration currently exist for SLURM, via SPANK plugin. (Should work with other scheduler) | qsub -l to request docker boolean resource. UGE will be able to assign different priorities to diff containers |

| Resource Orchaestration | SwarmKit provided by Docker | Scheduler's job to spin multiple instances on multiple nodes | Yes. salloc -N4 ... srun ... |

|

| Namespace isolation paradigm | By Default, share little. Process, Network, user space are isolated by default, but can be shared via cli arg PID 1 is not init, but the process started by docker. User inside container cannot see hosts' processes. Network typically NAT'ed |

HPC workflow doesn't benefit much from process isolation, thus by default share most everything, so process running inside container is largely the same as running on actual host. But process namespace isolation can easily be enabled. Default share Process Namespace, username space (user inside container can kill process on host) While inside a singularity shell, ps -ef shows all process of host, or those of other containers. container user can kill PID of process on host. User does not change ID inside container, cannot become root from inside the process. Network is transparent to the process. /home mount is made available in container, -B will add additional bind mounts easily. |

Supported | |

| Resource restriction via cgroup? | docker run -c ... -m ... --cpuset ... | Not touched by Singularity. Scheduler/Resource Manager to control what is available to Singularity | Let SLURM manage cgroup restrictions | cgroups_params in qconf -mconf |

| MPI |

MP-what? :-) |

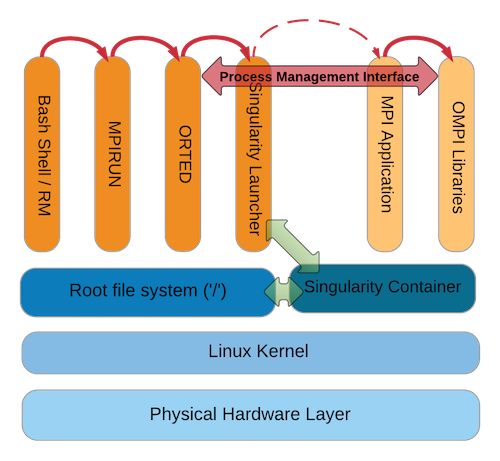

Singularity has build-in support for MPI (OpenMPI, MPICH, IntelMPI). Once app is build with MPI libraries, execute mpirun as normal, replacing the usual binary with the single file singularity app. While the app is running inside the singularity container, Process Management Interface (PMI/PMIx) calls will pass thru the singularity launcher onto ORTED. This is what MPI is designed to do, no hacks, so works well. |

Shifter relies on MPICH Application Binary Interface (ABI). Apps that use vanilla MPI that is compatibile with MPICH would work. Site-specific MPI libraries need to be copied to the container at run time. LD_LIBRARY_PATH need to include for example /opt/udiImage/... |

|

| MPI eg |

mpirun singularity exec centos_ompi.img /usr/bin/mpi_ring

|

|||

| IB | Likely ok for IPoIB. Probably no support for RDS. | Native access | ||

| GPU | Your app should run head-less, why you trying to access video card? :-) | Native access, native speed. See NIH's page for setup | In progress. Reportedly getting near native performance | |

| Network access | Utilize Network Namespace. NAT is default | Transparent. Access network like any user process would. | Transparent? | |

| Host's device access | /dev, /sys and /proc are bind mounted to container by default. | /dev, /sys and /proc of host show up inside container | ||

| Host's file system access | docker run -v hostpath:containerpath will bind mount to make host's FS accessible to container. container can be modified and saved. root access possible. |

Singularity app run as a user process, therefore it has access to all of host's fs and devices that any user process have access to, including specially optimized FS like Lustre and GPFS. Singularity performs a bind mount between host's mount to the inside of the container. -B /opt:/mnt will bind mount host's /opt to container's /mnt Container writable if started with -w. Root access possible if singularity is run by root |

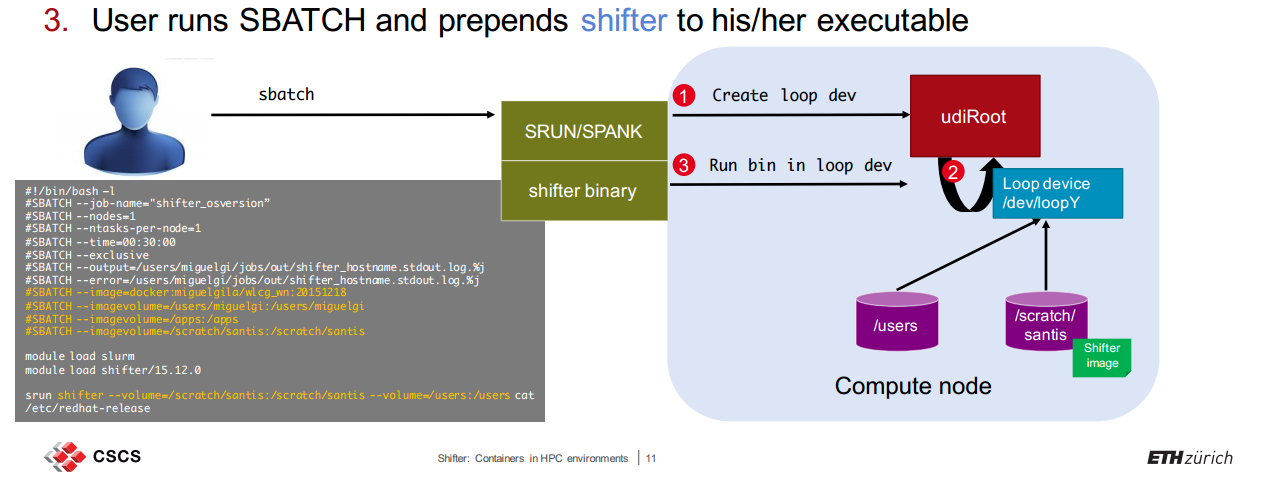

Start shifter with volume mapping, done using loop dev. User has access to all typical mounts from host. Allows mounting a localized loop mounted file system on each node to act as a local cache, especially useful in diskless cluster. |

qsub -xdv to map host's FS to container |

| Data persistence in image |

|

|

? | |

| Deployment |

Single package install. Daemon service with root priviledge Use in batch processing environment left as an exercise to the reader |

root to install singularity, sexec-setuid need setuid root to work correctly. Single .rpm/.deb per host. No scheduler modification needed. |

Shifter need to be installed in all hpc nodes Docker NOT needed in hpc Need an ImageGateway |

Upgrade to new version of UGE Head and compute nodes need to be newer version of Linux that supports Docker (eg RHEL 7). |

| Container activation | Docker daemon running on each host | loopback mount on singularity image file to utilize container. No daemon process. | No docker daemon on CN. srun ... loopback mount | May employ a set of nodes with running docker daemon, such nodes are flagged as providing a boolean resource for job to make request against. (?) |

| Container build-up |

|

|

|

|

| Security |

User running docker commands need to be in special docker group to gain elevated system access |

No change in security paradigm. User run singularity image/app without special privileges. Option to start only root-owned container image. |

root to install shifter binary. Need special integration into scheduler (or only in cray cuz they are special?). User run shifter image/app without special privileges. |

|

| Root escalation | container starts with priviledged access |

To obtain root in singularity container, it must have been started as root ie, root can be had via sudo singularity shell centos7.img Container started as normal user cannot use su or sudo to become root; singularity utilize kernel's NO_NEW_PRIV flag (Kernel 3.5 and above). ie, /bin/su (and /bin/sudo), will have setuid flag, but it is blocked from the inside by singularity |

? | |

| Env |

|

|||

| User management | ? | Yes. Container has sanitized passwd, group files | ||

| Sample User Commands |

sudo docker pull httpd:latest docker images -a sudo docker run -p 8000:80 httpd |

sudo singularity create ubuntu.img sudo singularity bootstrap ubuntu.img ubuntu.def singularity exec ubuntu.img bash ./ubuntu.img # the %runscript section of the container will autoamtically be invoked |

module load shifter shiftering pull docker:python:3.5 # pull docker image shifterimg images # list images shifter --image=python:3.5 python # execute a container app module load slurm sbatch --image=docker:python:3.5 shifter python # run as job |

|

| Unix pipes |

output of container captured by pipe. eg: docker run centos:6 ls /etc | wc However, docker won't take standard input, at least not for 1.5-1. The follwing does not work as one might expect: echo 'cat("Hello world\n")' | docker run r-base:latest R --no-save |

can daisy chain singularity apps like regular unix commands. eg echo "echo hello world" | singularity exec centos7.img bash |

Shifter will capture standard input as well inside an sbatch script: echo 'cat("Hello world\n")' | shifter R --no-save |

|

| Performance | Docker container startup time is much faster than traditional VM, as it does not need to emaulate hardware and is essentially just starting a new process. |

Container startup time a bit faster than docker, as it does away with much of the namespace settings. On Lustre backed system, where metadata lookup span different server than data block lookup, single file container image significantly improve performance by reducing multitude of meta data lookup with the MDS. |

On Lustre backed system, where metadata lookup span different server than data block lookup, single file container image significantly improve performance by reducing multitude of meta data lookup with the MDS. | |

| Misc |

Singularity app can run outside HPC, without any job scheduler. It can serve as a container for app portability outside the HPC world, and since a single file encompass the whole container, this maybe advantageous for sharing, portability and archive. Singularity recommends compiling all apps into the Singularity container, spec files would need to be written. I hear they are looking to adopt Docker and/or RKT specfile to minimize this burden. Docker files can be imported or even run directly. Given docker operate on a different paradigm, the hit-and-miss outcome of such conversion is understandable. In future development, maybe NeRSC's shifter could run singularity app as well. |

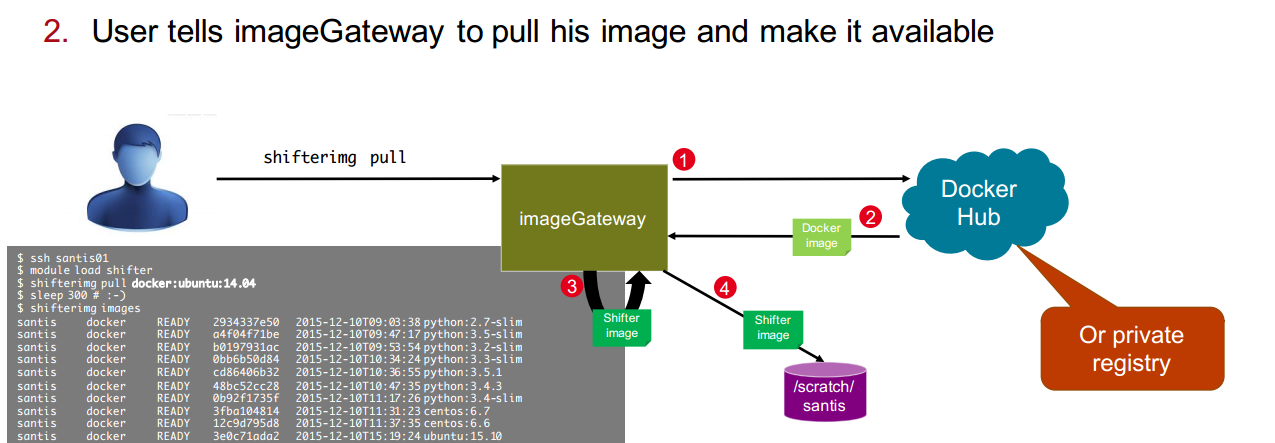

Shifter container/app can run as stand alone outside HPC scheduler. This is certainly useful for testing and development. However, if one isn't trying to utilize container in HPC, maybe there is not much point in converting docker images to shifter images. Shifter works by pulling the large number of existing containers. Docker isn't needed inside the HPC, some gateway location can be used for Shifter to pull docker images and store converted image in a location accessible cluster-wide, eg /scratch. See shifter workflow below for more details. |

||

| Feel | High :) | Building a singularity 2.2 image feels a lot like building a VM using kickstart file. | ||

| Adopters | Like wildfire by internet companies | UC Berkeley, Stanford, TACC, SDSC, GSI, HPC-UGent, Perdue, UFL, NIH, etc. Seems like the non-cray community is gravitating toward Singularity. |

NeRSC, VLSCI, CSCS, CERN, etc. Seems like the big physics number crunchers are gravitating toward shifter, cuz they use Cray or IBM Blue Gene? |

|

| Product integration and support | nextflow | Qluster, nextflow, Bright Cluster Manager, Ontropos, Open Cloud/Intel Cluster Orchestrator (in discussion). | Cray | |

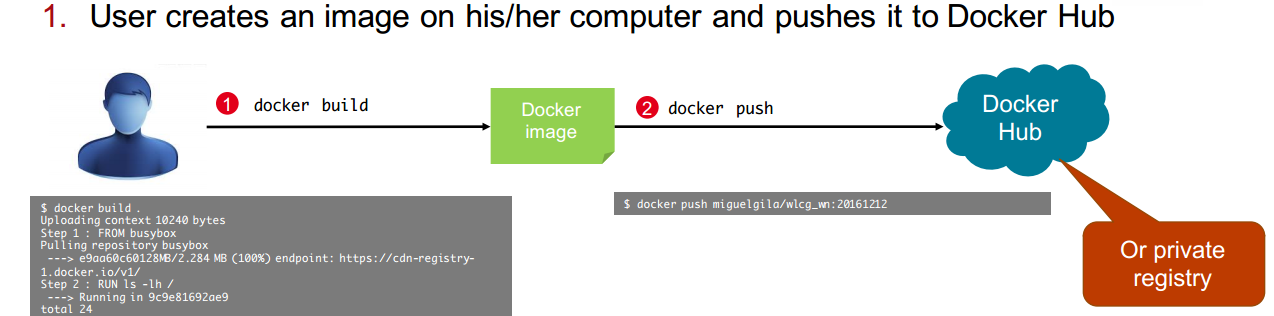

Shifter Workflow

As per the CSCS/ETH SlideShare deck, based on Shifter 15.12.0.
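The last step of this workflow, running a converted image under Slurm, can be sketched as a small generator for a batch script. This assumes the Shifter SPANK plugin is installed so that `#SBATCH --image=...` is understood, as in the sample commands in the table; the function name `make_shifter_job`, the task count and `myscript.py` are hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Sketch: print a Slurm batch script that runs a Docker-derived Shifter image.
# Assumes the Shifter SPANK plugin is installed (it provides the --image option).
# make_shifter_job and myscript.py are hypothetical names for illustration.

make_shifter_job() {
  local image=$1    # e.g. docker:python:3.5, pulled beforehand with shifterimg
  local ntasks=$2   # number of tasks for srun
  shift 2           # remaining args: the command to run inside the image
  cat <<EOF
#!/bin/bash
#SBATCH --image=${image}
#SBATCH --ntasks=${ntasks}
# srun starts one shifter process per task; each runs inside the image
srun shifter $*
EOF
}
```

Usage, after `shifterimg pull docker:python:3.5`: `make_shifter_job docker:python:3.5 4 python myscript.py > job.sh && sbatch job.sh`. Generating the script keeps the image name in one place; writing `job.sh` by hand works just as well.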

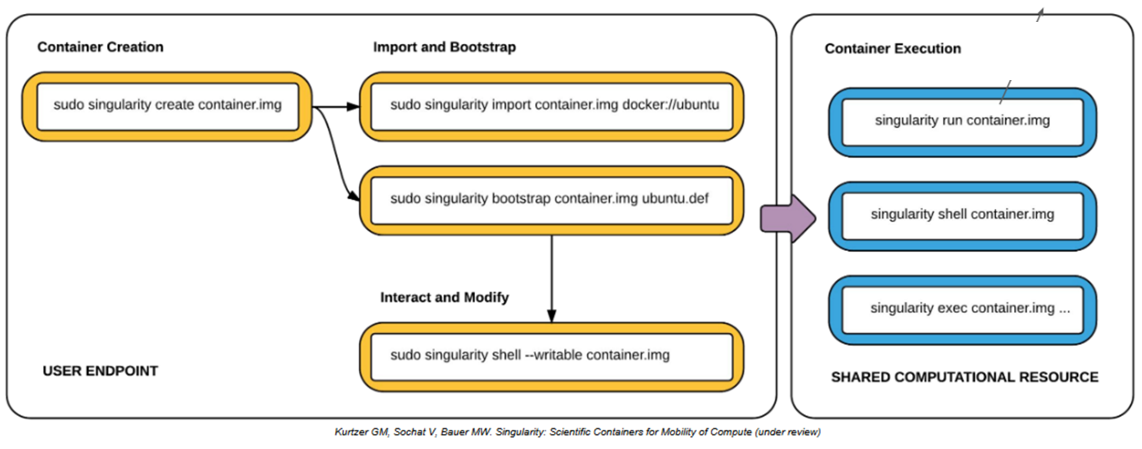

Singularity Workflow

As per Greg Kurtzer keynote at HPC Advisory Council 2017 @ Stanford, based on Singularity 2.2.

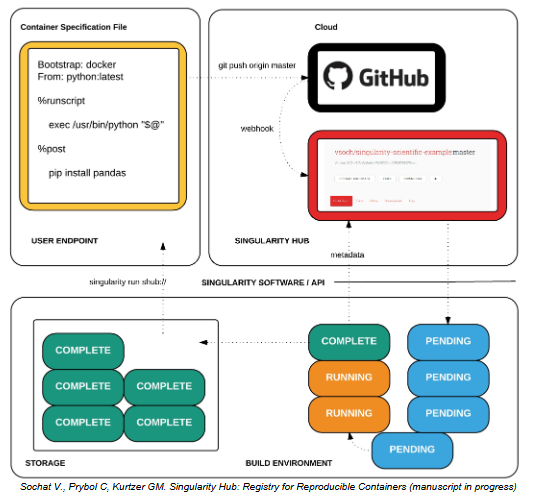

Workflow with Singularity Hub

MPI in Singularity
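Following the `mpirun singularity exec centos_ompi.img /usr/bin/mpi_ring` pattern from the table, a batch job can let the host's `mpirun` start the ranks, each of which is a `singularity exec` of the image. This is a sketch assuming Slurm and a host Open MPI that is compatible with the MPI library inside the image; `make_mpi_job` and the `openmpi` module name are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print a Slurm batch script that runs an MPI app packaged in a
# Singularity image. The host mpirun launches the ranks; each rank executes
# the app inside the image. Assumes host and container MPI are compatible.
# make_mpi_job and the "openmpi" module name are hypothetical.

make_mpi_job() {
  local nodes=$1 tasks_per_node=$2 image=$3 app=$4
  cat <<EOF
#!/bin/bash
#SBATCH --nodes=${nodes}
#SBATCH --ntasks-per-node=${tasks_per_node}
module load openmpi
mpirun singularity exec ${image} ${app}
EOF
}
```

Usage: `make_mpi_job 4 16 centos_ompi.img /usr/bin/mpi_ring > mpi_ring.sh && sbatch mpi_ring.sh`. No scheduler changes are needed; Singularity is just the command each rank runs.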

Example Commands in Docker

# get a container with anaconda python and jupyter notebook

docker run -i -t -p 8888:8888 continuumio/anaconda /bin/bash -c "/opt/conda/bin/conda install jupyter -y --quiet && mkdir -p /opt/notebooks && /opt/conda/bin/jupyter notebook --notebook-dir=/opt/notebooks --ip=0.0.0.0 --port=8888 --no-browser"

Example Commands in Singularity

sudo singularity create --size 2048 /opt/singularity_repo/ubuntu16.img

# Create an image file to host the content of the container.

# Think of it like creating the virtual hard drive for a VM.

# The image created should be a sparse file (i.e. with holes).

# However, while on Ubuntu/ext4 the image seems to be a compact file,

# on RHEL6/ext3 the image takes up its full final size.
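Whether the image really ends up sparse depends on the filesystem, and comparing the apparent size with the blocks actually allocated shows which case you are in. A minimal sketch assuming GNU coreutils (`stat -c`, `du -k`, `truncate`); it uses a stand-in file rather than a real Singularity image, and `image_usage` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: compare a file's apparent size with the disk space actually allocated
# (GNU coreutils assumed). A sparse image shows a large apparent size but little
# allocated space. image_usage is a hypothetical helper name.

image_usage() {
  local f=$1
  local apparent_kib allocated_kib
  apparent_kib=$(( $(stat -c %s "$f") / 1024 ))  # logical file size in KiB
  allocated_kib=$(du -k "$f" | cut -f1)           # allocated blocks in KiB
  echo "$apparent_kib $allocated_kib"
}

# Stand-in for `singularity create --size 2048`: a 2048 MiB file with no data written.
tmpimg=$(mktemp)
truncate -s 2048M "$tmpimg"
read -r apparent allocated <<< "$(image_usage "$tmpimg")"
echo "apparent: ${apparent} KiB, allocated: ${allocated} KiB"
rm -f "$tmpimg"
```

On a real image, run `image_usage /opt/singularity_repo/ubuntu16.img`: if the two numbers are close, the file is not sparse on that filesystem.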

sudo -E singularity bootstrap /opt/singularity_repo/ubuntu16.img /opt/src/singularity/examples/ubuntu.def

# "kickstart" build the container

# If behind a proxy, sudo -E will preserve http_proxy env var

singularity shell ubuntu.img

singularity exec ubuntu.img bash # slightly different from shell: PS1 is not changed, and .bash_profile is sourced here

singularity exec centos.img rpm -qa

# Note that uname and uptime will always report info of the host, not the container.

singularity exec -w centos.img zsh # -w for writable container, FS ACL still applies

# centos.img needs to be writable by the user

sudo singularity shell -w centos.img # root can edit all contents inside container

# Best to build up the container with a kickstart mentality,

# i.e. to add more packages to the image, vi /opt/src/singularity/examples/ubuntu.def

# and re-run the bootstrap command.

# Bootstrap on an existing image builds on top of it, rather than overwriting it or restarting from scratch.

# A singularity .def file is kind of like a kickstart file.

# Unix commands can be run, but if there is any error, the bootstrap process ends.
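The stop-on-first-error behaviour described above is analogous to running a shell script with `set -e`. Treating `%post` that way is an analogy, not something checked against Singularity's source, but it shows both the effect and the usual `|| true` escape hatch for a step that is allowed to fail; `run_post` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: emulate a "stop on first error" build section with `sh -e`.
# This is an analogy for the bootstrap behaviour described above,
# not Singularity's own code. run_post is a hypothetical helper.

run_post() {
  # Run the given script text with errexit on: the first failing command
  # aborts the rest, like a failing step aborting the bootstrap.
  sh -e -c "$1"
}

# A failing step stops everything after it ("step2" never prints).
strict_out=$(run_post 'echo step1; false; echo step2' 2>&1) && strict_rc=0 || strict_rc=$?

# Appending `|| true` marks a step as allowed to fail, so the script continues.
tolerant_out=$(run_post 'echo step1; false || true; echo step2' 2>&1) && tolerant_rc=0 || tolerant_rc=$?

echo "strict: rc=$strict_rc output=[$strict_out]"
echo "tolerant: rc=$tolerant_rc output=[$tolerant_out]"
```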

# Pipes and redirects work as input and output for singularity container executions:

echo "echo hello world" | singularity exec centos7.img bash

singularity exec centos7.img echo 'echo hello world' | singularity exec centos7.img bash

singularity exec centos7.img rpm -qa | singularity exec centos7.img wc -l

singularity exec centos7.img python < ./myscript.py
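Because `singularity exec` passes stdin and stdout through like any other command, container steps can be wrapped in shell functions and composed with ordinary pipes. A sketch with a hypothetical `ctr_exec` helper: it uses the image when the `singularity` CLI and the image file are both present, and otherwise runs the command on the host, which is handy for trying the pipeline shape on a machine without Singularity.

```shell
#!/usr/bin/env bash
# Sketch: run a command inside a Singularity image when possible, otherwise on the host.
# ctr_exec is a hypothetical helper name; stdin/stdout are inherited either way,
# so it composes with pipes and redirects like the examples above.

ctr_exec() {
  local image=$1
  shift
  if command -v singularity >/dev/null 2>&1 && [ -f "$image" ]; then
    singularity exec "$image" "$@"
  else
    "$@"
  fi
}

# Same shape as: echo "echo hello world" | singularity exec centos7.img bash
greeting=$(echo "echo hello world" | ctr_exec centos7.img bash)

# Same shape as: ... | singularity exec centos7.img wc -l
line_count=$(printf 'a\nb\nc\n' | ctr_exec centos7.img wc -l)

echo "$greeting ($line_count lines counted)"
```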

Example Singularity container definition file

CentOS:

# Copyright (c) 2015-2016, Gregory M. Kurtzer. All rights reserved.

#

# "Singularity" Copyright (c) 2016, The Regents of the University of California,

# through Lawrence Berkeley National Laboratory (subject to receipt of any

# required approvals from the U.S. Dept. of Energy). All rights reserved.

BootStrap: yum

OSVersion: 7

MirrorURL: http://mirror.centos.org/centos-%{OSVERSION}/%{OSVERSION}/os/$basearch/

Include: yum

# If you want the updates (available at the bootstrap date) to be installed

# inside the container during the bootstrap instead of the General Availability

# point release (7.x) then uncomment the following line

#UpdateURL: http://mirror.centos.org/centos-%{OSVERSION}/%{OSVERSION}/updates/$basearch/

%runscript

echo "This is what happens when you run the container..."

%post

echo "Hello from inside the container"

yum -y install vim-minimal

# adding a number of rather useful packages

yum -y install bash

yum -y install zsh

yum -y install environment-modules

yum -y install which

yum -y install less

yum -y install sudo # binary has setuid flag, but it is not honored inside singularity

yum -y install wget

yum -y install coreutils # provide yes

yum -y install bzip2 # anaconda extract

yum -y install tar # anaconda extract

# bootstrap will terminate on first error, so be careful!

test -d /etc/singularity || mkdir /etc/singularity

touch /etc/singularity/singularity_bootstart.log

echo '*** env ***' >> /etc/singularity/singularity_bootstart.log

env >> /etc/singularity/singularity_bootstart.log

# install anaconda python by download and execution of installer script

# the test conditions ensure that a subsequent singularity bootstrap to expand the image doesn't re-install anaconda

cd /opt

[ -f Anaconda3-4.2.0-Linux-x86_64.sh ] || wget https://repo.continuum.io/archive/Anaconda3-4.2.0-Linux-x86_64.sh

[ -d /opt/anaconda3 ] || bash Anaconda3-4.2.0-Linux-x86_64.sh -p /opt/anaconda3 -b # -b = batch mode, accept license w/o user input
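The `||` guards above are what make re-running `singularity bootstrap` on an existing image safe for the Anaconda step. The same idea as a reusable function, sketched with a hypothetical `install_once` helper that skips a step when its target already exists; the demo uses a temporary directory and a fake installer instead of /opt/anaconda3.

```shell
#!/usr/bin/env bash
# Sketch: skip an install step if its target already exists, so that a second
# bootstrap over the same image does not redo it. install_once is hypothetical.

install_once() {
  local target=$1
  shift
  if [ -e "$target" ]; then
    echo "skip: $target already present"
    return 0
  fi
  "$@"   # run the install command only on the first pass
}

# Demo with a temporary prefix standing in for /opt/anaconda3.
prefix=$(mktemp -d)/anaconda3
runs=0
fake_install() { mkdir -p "$prefix"; runs=$((runs + 1)); }

install_once "$prefix" fake_install   # first bootstrap: installs
install_once "$prefix" fake_install   # second bootstrap: skipped
```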

Ubuntu snippet. I found that --force-yes is needed when building from RHEL.

The debootstrap RPM is needed on a CentOS machine for this bootstrap to work.

%post

echo "Hello from inside the container"

sed -i 's/$/ universe/' /etc/apt/sources.list

apt-get update # refresh package lists so the universe component is visible to apt-get install

# when building from ubuntu, should avoid using --force-yes in apt-get

# but when building from RHEL, had to use --force-yes for it to work (at least in RHEL6)

apt-get -y --force-yes install vim

apt-get -y --force-yes install ncurses-term
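One caveat with the `sed` line above: it appends ` universe` to every line of sources.list, including comments and blank lines, and a second bootstrap over the same image appends it again. A sketch of a variant that only touches `deb` lines not already listing `universe`, assuming GNU sed; `add_universe` is a hypothetical helper, demonstrated on a temporary file rather than /etc/apt/sources.list.

```shell
#!/usr/bin/env bash
# Sketch: add the "universe" component to apt "deb" lines, idempotently.
# add_universe is a hypothetical helper; assumes GNU sed (-i without a suffix).

add_universe() {
  # Only lines starting with "deb " that do not already mention universe.
  sed -i '/^deb /{/universe/!s/$/ universe/;}' "$1"
}

demo=$(mktemp)
printf '%s\n' 'deb http://archive.ubuntu.com/ubuntu xenial main' '# a comment' > "$demo"
add_universe "$demo"
add_universe "$demo"   # running twice, as a re-bootstrap would, changes nothing more
result=$(cat "$demo")
rm -f "$demo"
printf '%s\n' "$result"
```

Inside `%post` this would be `add_universe /etc/apt/sources.list` followed by `apt-get update`.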

Additional singularity .def examples

Readings for Containers in HPC

- State of Linux Container (includes a comparison with VMs) by Christian Kniep at the 2017 HPC Advisory Council @ Stanford: video and slides.

- Container meets HPC blog.

Readings for Singularity

- Singularity: Container for Science and reproducibility. Video and slides of the keynote at HPC Advisory Council 2017 @ Stanford by Greg Kurtzer. It also presents the challenges of using Docker in the HPC world.

- Quick, concise intro to the latest Singularity (version 2.2 era)

- NIH's Singularity app page, with full info on how to use Singularity in HPC, including GPUs and Docker Hub.

- Singularity documentation site

- HPC Wire Q+A with main Singularity author Greg Kurtzer

- Admin Magazine: covers the background of containers and the problems of using Docker in HPC. Also has setup and an example run with Singularity, but for version 1.x, which is very different from 2.x.

- Motivation and implementation approach presented by Greg in an interview with Admin Magazine. It was shortly before the 1.0 release, but the goals and approach are reflected in the 2.x release as well.

Readings for Shifter

- Shifter presentation at CHEP 2016 by Lisa Gerhardt et al.

- User defined images NERSC documentation.

- Shifter use cases SlideShare from CSCS/ETH Zurich

- Container and HPC SlideShare.

- Shifter vs Docker documentation at VLSCI, with step-by-step instructions on how to obtain and build a shifter app in a SLURM cluster.

- Using MPI in Shifter